Gap Mitigation

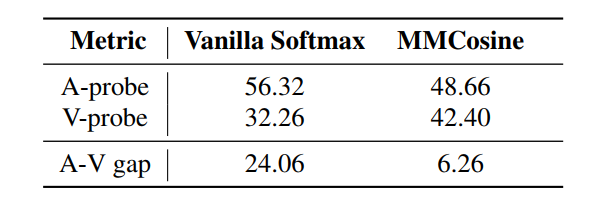

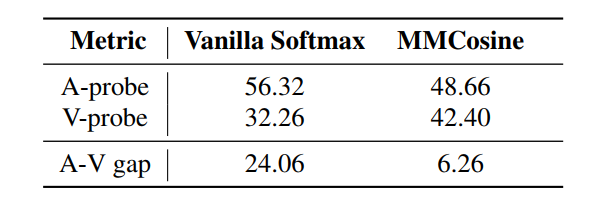

MMCosine reduces the performance gap between the uni-modal encoders, boosting both the weak modality and the joint model.

Audio-visual learning helps to comprehensively understand the world by fusing useful information from multiple modalities. However, recent studies show that the imbalanced optimization of uni-modal encoders in a jointly trained model is a bottleneck to improving the model's performance. We further find that up-to-date imbalance-mitigating methods fail on some fine-grained audio-visual tasks, which place a higher demand on a discriminable feature distribution.

Motivated by the success of cosine loss, which builds hyperspherical feature spaces and achieves lower intra-class angular variability, this paper proposes the Multi-Modal Cosine loss (MMCosine). It applies modality-wise $L_2$ normalization to both the features and the classifier weights, aiming for balanced and better multi-modal fine-grained learning. We show that our method alleviates the imbalanced optimization from the perspective of weight norm and fully exploits the discriminability of the cosine metric.
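The normalization described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes a late-fusion classifier whose weights split into per-modality blocks $W_{m,k}$, drops the bias term, and uses a hypothetical scale hyperparameter `s`. Under these assumptions the fused logit for class $k$ is $s\sum_m \cos\theta_{m,k}$, where $\theta_{m,k}$ is the angle between $W_{m,k}$ and the modality feature $f_m$. All function names are illustrative.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit L2 norm; the epsilon guards against zero vectors."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, 1e-12) for x in v]

def cosine(u, v):
    """Cosine similarity: dot product of the two L2-normalized vectors."""
    return sum(a * b for a, b in zip(l2_normalize(u), l2_normalize(v)))

def vanilla_logits(feats, weights):
    """Plain late fusion for comparison: logit_k = sum_m W_{m,k} . f_m (no bias)."""
    num_classes = len(next(iter(weights.values())))
    return [sum(sum(w * x for w, x in zip(weights[m][k], feats[m])) for m in feats)
            for k in range(num_classes)]

def mmcosine_logits(feats, weights, s=1.0):
    """MMCosine-style logits (sketch): logit_k = s * sum_m cos(W_{m,k}, f_m).

    feats:   dict modality -> feature vector f_m
    weights: dict modality -> list of per-class weight vectors W_{m,k}
    s:       hypothetical scale hyperparameter
    """
    num_classes = len(next(iter(weights.values())))
    return [s * sum(cosine(weights[m][k], feats[m]) for m in feats)
            for k in range(num_classes)]

def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, using log-sum-exp for stability."""
    mx = max(logits)
    lse = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return lse - logits[target]

# Toy example (made-up numbers): the audio branch has much larger norms.
feats = {"audio": [3.0, 0.0], "visual": [0.0, 1.0]}
weights = {"audio": [[4.0, 0.0], [0.0, 4.0]],
           "visual": [[0.0, 0.5], [0.5, 0.0]]}

print(vanilla_logits(feats, weights))   # [12.5, 0.0]: audio gives 12.0, visual 0.5
print(mmcosine_logits(feats, weights))  # [2.0, 0.0]: each modality gives at most s
```

Multiplying one modality's weights by any positive factor leaves `mmcosine_logits` unchanged, while the vanilla logits grow with the weight norm. Bounding each modality's contribution to $[-s, s]$ in this way is one reading of how normalization can keep a dominant modality from crowding out the weaker one.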

Extensive experiments demonstrate the effectiveness of our method and its versatility when combined with advanced multi-modal fusion strategies and up-to-date imbalance-mitigating methods.


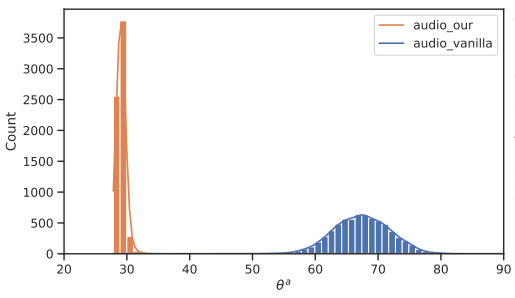

The learned angles between uni-modal features and their ground-truth class centers become more compact: MMCosine lowers the intra-class angular variation and maximizes the discriminability of the cosine metric.
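The angle statistic in this figure can be approximated as follows. This sketch assumes that each class center is the classifier weight vector of that class and measures spread as the population standard deviation of the angles; both choices, the toy data, and the function names are illustrative rather than taken from the paper.

```python
import math

def angle_deg(u, v):
    """Angle in degrees between two vectors; the cosine is clamped to [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    c = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(c))

def intra_class_angle_stats(features, labels, class_centers):
    """Mean and population std of the angle between each feature and its
    ground-truth class center (assumed here to be that class's weight vector)."""
    angles = [angle_deg(f, class_centers[y]) for f, y in zip(features, labels)]
    mean = sum(angles) / len(angles)
    std = math.sqrt(sum((a - mean) ** 2 for a in angles) / len(angles))
    return mean, std

centers = [[1.0, 0.0], [0.0, 1.0]]
spread = intra_class_angle_stats([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0, 0, 0], centers)
compact = intra_class_angle_stats([[1.0, 0.1], [1.0, -0.1]], [0, 0], centers)
print(spread)   # mean about 45 degrees, std about 36.7 degrees
print(compact)  # both features sit at the same angle, so std is 0
```

A lower standard deviation for the same class corresponds to the "more compact" angle distribution described in the caption.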

@inproceedings{ruize2023mmcosine,
  author={Xu, Ruize and Feng, Ruoxuan and Zhang, Shi-xiong and Hu, Di},
  booktitle={ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  year={2023},
  organization={IEEE},
}