Abstract

Generalizable articulated object manipulation is essential for home-assistant robots. Recent efforts focus on imitation learning from demonstrations or reinforcement learning in simulation; however, due to the prohibitive cost of real-world data collection and precise object simulation, these approaches still struggle to adapt broadly across diverse articulated objects. Many recent works have also tried to exploit the strong in-context learning ability of Large Language Models (LLMs) for generalizable robotic manipulation, but most of them focus on high-level task planning and sideline low-level robotic control. In this work, building on the idea that the kinematic structure of an object determines how we can manipulate it, we propose a kinematic-aware prompting framework that prompts LLMs with kinematic knowledge of objects to generate low-level motion trajectory waypoints, supporting the manipulation of various objects. To effectively prompt LLMs with the kinematic structure of different objects, we design a unified kinematic knowledge parser, which represents various articulated objects as a unified textual description containing their kinematic joints and contact location. Building on this unified description, we propose a kinematic-aware planner that generates precise 3D manipulation waypoints via a kinematic-aware chain-of-thought prompting method. Our evaluation spans 48 instances across 16 distinct categories and shows that our framework not only outperforms traditional methods on 8 seen categories but also exhibits strong zero-shot capability on 8 unseen articulated object categories, using only 17 demonstrations. Moreover, real-world experiments on 7 different object categories demonstrate our framework's adaptability in practical scenarios.

Introduction

Generalizable articulated object manipulation is imperative for building intelligent, multi-functional robots. However, because the kinematic structures of objects are highly heterogeneous, the manipulation policy can vary drastically across object instances and categories. To ensure consistent performance on automated tasks in complex real-world scenarios, prior work on generalizable object manipulation has focused on imitation learning from demonstrations and reinforcement learning in simulation, both of which typically require substantial amounts of robot data.

In this work, we study how to harness LLMs for generalizable articulated object manipulation, recognizing that the rich world knowledge inherent in LLMs can provide a reasonable understanding of how to manipulate various articulated objects. However, to fully leverage this knowledge for precise articulated object manipulation, we still face the critical challenge of converting abstract manipulation commonsense into precise low-level robotic control.

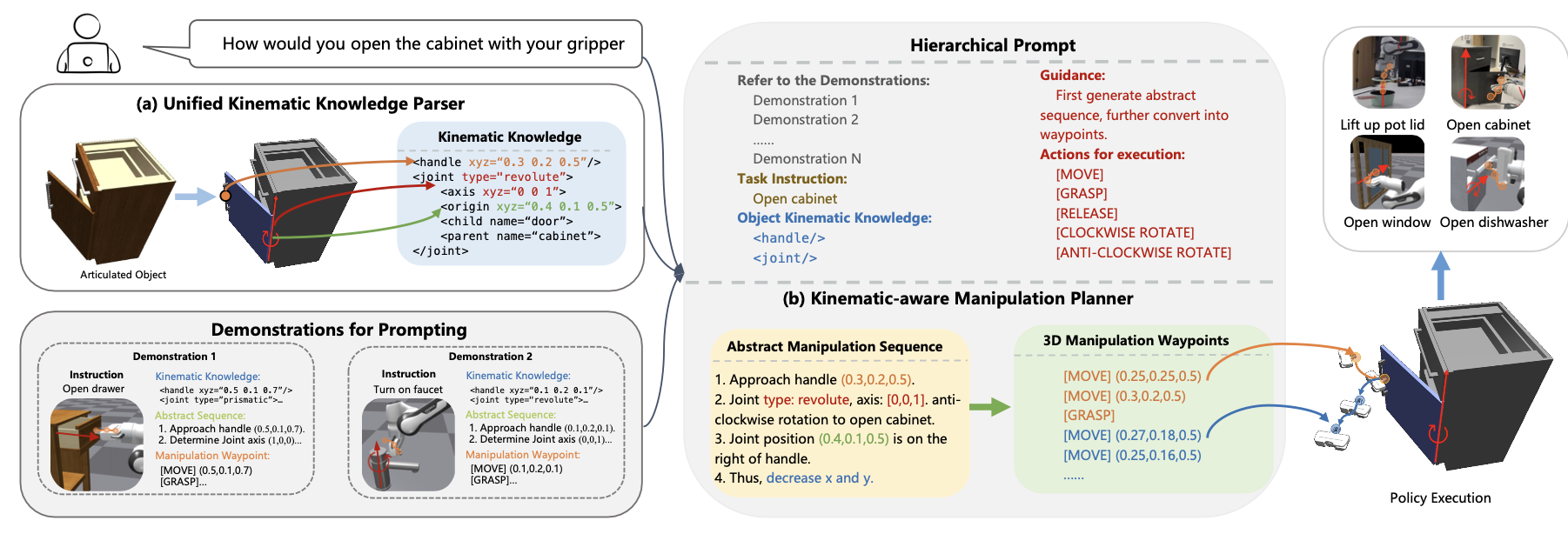

Figure 1: Our pipeline.

To tackle this challenge, we propose a kinematic-aware prompting framework that extracts the kinematic knowledge of various objects and prompts LLMs to generate low-level motion trajectory waypoints for object manipulation, as shown in Figure 1. The idea behind this method is that the kinematic structure of an object determines how we can manipulate it. Therefore, we first propose a unified kinematic knowledge parser (Figure 1(a)), which represents various articulated objects as a unified textual description of their kinematic joints and contact location. Using this unified description, a kinematic-aware planner (Figure 1(b)) generates precise 3D manipulation waypoints for articulated object manipulation via kinematic-aware chain-of-thought prompting. Concretely, the planner first prompts LLMs to generate an abstract textual manipulation sequence under the guidance of the kinematic structure. It then takes this kinematic-guided textual manipulation sequence as input and outputs 3D manipulation trajectory waypoints via in-context learning for precise robotic control. With this kinematic-aware hierarchical prompting, our framework can effectively use LLMs to understand diverse object kinematic structures and achieve a generalizable articulated object manipulation policy.
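To make the data flow of the two-stage prompting concrete, here is a minimal Python sketch under stated assumptions. The `Joint` and `ArticulatedObject` schema, the text layout produced by `describe`, the prompt wording, the one-`[x, y, z]`-per-line output format, and the stub `fake_llm` are all hypothetical illustrations, not the paper's actual parser output or prompts. The sketch only traces object → unified text → textual plan → parsed 3D waypoints.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Joint:
    """One kinematic joint (hypothetical schema, not the paper's exact format)."""
    name: str
    joint_type: str  # "revolute" or "prismatic"
    axis: Vec3       # direction of the joint axis
    origin: Vec3     # a point on the joint axis


@dataclass
class ArticulatedObject:
    category: str
    joints: List[Joint]
    contact_part: str
    contact_point: Vec3


def _fmt(v: Vec3) -> str:
    return "[" + ", ".join(f"{x:.2f}" for x in v) + "]"


def describe(obj: ArticulatedObject) -> str:
    """Parser step: render any articulated object in one shared text format."""
    lines = [f"Object: {obj.category}"]
    for j in obj.joints:
        lines.append(f"Joint {j.name}: type={j.joint_type}, axis={_fmt(j.axis)}, origin={_fmt(j.origin)}")
    lines.append(f"Contact: {obj.contact_part} at {_fmt(obj.contact_point)}")
    return "\n".join(lines)


def plan_prompt(desc: str, instruction: str, demos: List[str]) -> str:
    """Stage 1: ask for an abstract, kinematics-guided textual manipulation sequence."""
    return "\n\n".join(["Describe step by step how to manipulate the object, respecting its joints.",
                        *demos, desc, f"Instruction: {instruction}", "Plan:"])


def waypoint_prompt(desc: str, instruction: str, plan: str, demos: List[str]) -> str:
    """Stage 2: turn the textual plan into 3D end-effector waypoints."""
    return "\n\n".join(["Output one [x, y, z] waypoint per line for the plan.",
                        *demos, desc, f"Instruction: {instruction}", f"Plan: {plan}", "Waypoints:"])


_NUM = r"(-?\d+(?:\.\d+)?)"
_POINT = re.compile(r"\[\s*" + r"\s*,\s*".join([_NUM] * 3) + r"\s*\]")


def parse_waypoints(text: str) -> List[Vec3]:
    """Extract every [x, y, z] triple from the LLM's stage-2 answer."""
    return [tuple(float(g) for g in m.groups()) for m in _POINT.finditer(text)]


def kinematic_cot(llm: Callable[[str], str], obj: ArticulatedObject, instruction: str,
                  plan_demos: List[str], waypoint_demos: List[str]) -> List[Vec3]:
    desc = describe(obj)
    plan = llm(plan_prompt(desc, instruction, plan_demos))
    return parse_waypoints(llm(waypoint_prompt(desc, instruction, plan, waypoint_demos)))


# Usage with a stub LLM (a real system would query a hosted model here).
door = ArticulatedObject(
    category="cabinet",
    joints=[Joint("door_hinge", "revolute", (0.0, 0.0, 1.0), (0.6, -0.3, 0.0))],
    contact_part="door handle",
    contact_point=(0.6, 0.1, 0.4),
)


def fake_llm(prompt: str) -> str:
    if prompt.endswith("Waypoints:"):
        return "[0.60, 0.10, 0.40]\n[0.45, 0.20, 0.40]"
    return "Grasp the handle, then pull it along an arc around the hinge."


waypoints = kinematic_cot(fake_llm, door, "open the cabinet door", [], [])
print(describe(door))
print(waypoints)
```

In a real setup, `plan_demos` and `waypoint_demos` would carry the in-context demonstrations; they are left empty here so the stub stays short.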

Experiments

Dataset

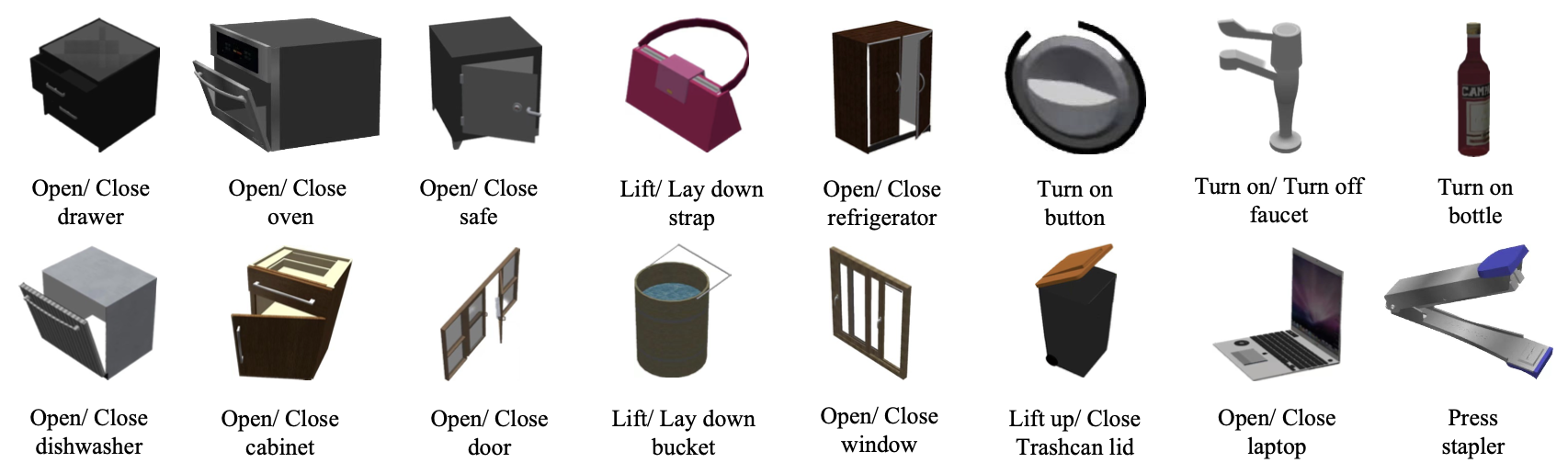

Figure 2: The articulated objects used in our experiments. Each object is associated with one or two manipulation instructions.

To comprehensively evaluate the generalization capability of our framework, we first conduct experiments in the Isaac Gym simulator, using 48 distinct object instances across 16 categories of articulated objects from the PartNet-Mobility dataset. As shown in Figure 2, our evaluation dataset contains a broad spectrum of common articulated objects, covering diverse manipulation policies and articulation structures.

To measure the performance of different methods in more detail, we divide the dataset into two subsets. The first subset contains objects from the eight categories covered by the provided demonstrations, but with different poses and instances. The second subset contains object categories absent from the demonstrations, which places greater demands on the LLMs' reasoning capability and commonsense. During evaluation, we place each object at a random position reachable by the robotic arm.

Results

We compare our method with the following baselines:

1. LLM2Skill: We implement the LLM2Skill baseline as a variant of Code as Policies. We predefine 18 action primitives that suffice to complete both the demonstrated and the novel instructions; the LLM then selects the suitable action primitive given the detailed object kinematic knowledge.

2. LLM2Waypoints: A naive baseline that prompts LLMs to directly output waypoints for articulated object manipulation without any kinematic knowledge.

3. Behavior Cloning: We train a language-conditioned behavior cloning policy on the demonstrations, using the Decision Transformer architecture.

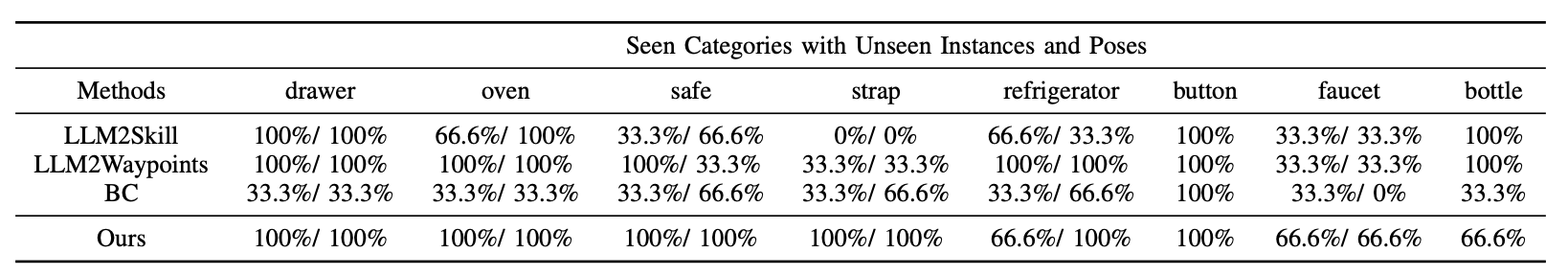

Table 1: Evaluation results on objects from seen categories.

As shown in Table 1, we first evaluate the methods on seen categories with different poses and instances. Most LLM-based methods perform well on these familiar categories, drawing on their strong in-context learning capability and extensive commonsense knowledge.

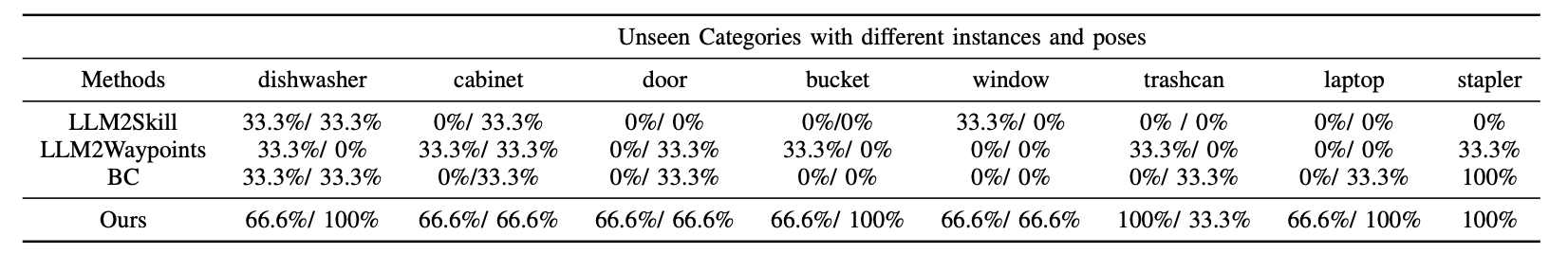

Table 2: Evaluation results on objects from unseen categories.

We further extend our evaluation to objects from unseen categories. As shown in Table 2, LLMs generalize easily to prismatic articulated objects such as kitchen pots, since their manipulation trajectory is a simple straight-line path. In contrast, the baseline models show a notable drop in average success rate on revolute articulated objects. By leveraging the object kinematic knowledge supplied by the unified kinematic knowledge parser, our method handles these revolute objects well.
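One way to see why revolute objects separate the methods: a prismatic trajectory is just linear interpolation along the sliding axis, whereas a revolute trajectory must follow a circular arc around the hinge, which requires both the axis direction and a point on the axis. The sketch below generates both kinds of waypoints; the function names, the uniform spacing, and the example coordinates are illustrative assumptions rather than the paper's planner, and the rotation uses Rodrigues' rotation formula.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec3) -> Vec3:
    n = math.sqrt(_dot(a, a))
    if n == 0:
        raise ValueError("joint axis must be non-zero")
    return _scale(a, 1.0 / n)


def prismatic_waypoints(start: Vec3, axis: Vec3, distance: float, n: int) -> List[Vec3]:
    """Evenly spaced points on a straight line along the sliding axis."""
    if n < 2:
        raise ValueError("need at least two waypoints")
    k = _unit(axis)
    return [_add(start, _scale(k, distance * i / (n - 1))) for i in range(n)]


def rotate_about_axis(p: Vec3, origin: Vec3, axis: Vec3, theta: float) -> Vec3:
    """Rodrigues' formula: rotate p by theta radians about the line through origin along axis."""
    k = _unit(axis)
    v = _sub(p, origin)
    c, s = math.cos(theta), math.sin(theta)
    v_rot = _add(_add(_scale(v, c), _scale(_cross(k, v), s)), _scale(k, _dot(k, v) * (1.0 - c)))
    return _add(origin, v_rot)


def revolute_waypoints(start: Vec3, origin: Vec3, axis: Vec3, angle: float, n: int) -> List[Vec3]:
    """Evenly spaced points on the arc swept by the contact point around the hinge."""
    if n < 2:
        raise ValueError("need at least two waypoints")
    return [rotate_about_axis(start, origin, axis, angle * i / (n - 1)) for i in range(n)]


# Pull a drawer 0.3 m along +x, and swing a door handle 90 degrees about a vertical hinge.
drawer_path = prismatic_waypoints((0.5, 0.0, 0.4), (1.0, 0.0, 0.0), 0.3, 4)
door_path = revolute_waypoints((1.0, 0.0, 0.4), (0.0, 0.0, 0.4), (0.0, 0.0, 1.0), math.pi / 2, 3)
print(drawer_path)
print(door_path)
```

A straight line between the first and last door waypoints would leave the 1 m circle that the handle is constrained to, which is why waypoints for revolute joints depend on knowing where the hinge is.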

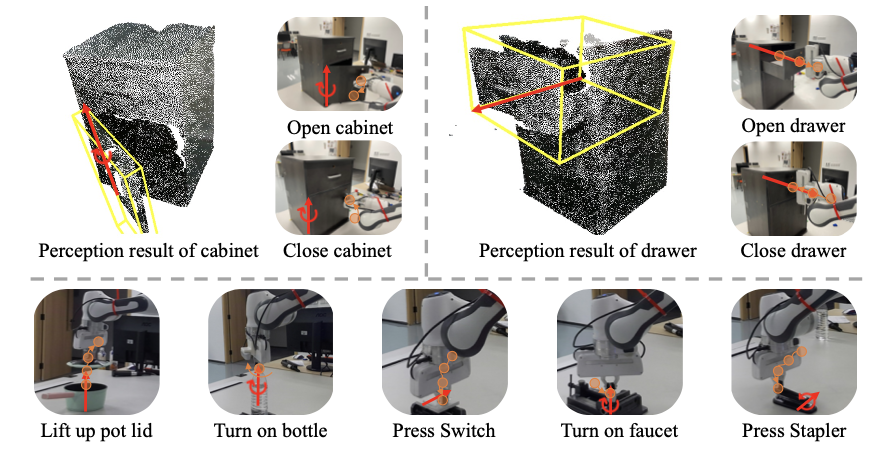

Real-world Experiment

Real-world experiments: we generate 3D manipulation waypoints with our framework for real-world object manipulation.

To demonstrate the effectiveness of our framework in practical scenarios, we conduct experiments with a Franka Panda robot arm in the real world. To convert the kinematic structure of the target object into textual format with our unified kinematic knowledge parser, we first combine Grounding-DINO and Segment Anything to accurately segment the target object. We then use GAPartNet as the backbone to detect actionable parts and capture joint information.
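The perception chain above can be sketched as glue code. The segmenter and part detector are injected as plain callables because the Grounding-DINO, Segment Anything, and GAPartNet interfaces are not reproduced here. The sketch also assumes an organized point cloud with one 3D point per mask entry; the `PartDetection` fields, the score threshold, the highest-score selection rule, and the output text format are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PartDetection:
    """Hypothetical output record for one detected actionable part."""
    part_class: str   # e.g. "hinge_door" or "slider_drawer"
    joint_type: str   # "revolute" or "prismatic"
    axis: Vec3
    origin: Vec3
    grasp_point: Vec3
    score: float


# Placeholder stage signatures; real models would be wrapped to match them.
Segmenter = Callable[[Any, str], Sequence[bool]]   # (image, text) -> per-point mask
PartDetector = Callable[[List[Vec3]], List[PartDetection]]


def _fmt(v: Vec3) -> str:
    return "[" + ", ".join(f"{x:.2f}" for x in v) + "]"


def perceive_to_text(image: Any, points: List[Vec3], target: str,
                     segment: Segmenter, detect_parts: PartDetector,
                     min_score: float = 0.5) -> Optional[str]:
    """Segment the target, detect parts on its points, and describe the best part."""
    mask = segment(image, target)
    if len(mask) != len(points):
        raise ValueError("mask must have one entry per point")
    object_points = [p for p, keep in zip(points, mask) if keep]
    parts = [d for d in detect_parts(object_points) if d.score >= min_score]
    if not parts:
        return None  # nothing confident enough to hand to the planner
    best = max(parts, key=lambda d: d.score)
    return "\n".join([
        f"Object: {target}",
        f"Joint: type={best.joint_type}, axis={_fmt(best.axis)}, origin={_fmt(best.origin)}",
        f"Contact: {best.part_class} at {_fmt(best.grasp_point)}",
    ])


# Stub stages standing in for the real models.
points = [(0.1 * i, 0.0, 0.5) for i in range(6)]
seen_counts: List[int] = []


def fake_segment(image: Any, text: str) -> List[bool]:
    return [i >= 2 for i in range(6)]  # the last four points belong to the target


def fake_detect(pts: List[Vec3]) -> List[PartDetection]:
    seen_counts.append(len(pts))
    return [
        PartDetection("hinge_door", "revolute", (0.0, 0.0, 1.0), (0.2, 0.0, 0.5), (0.5, 0.0, 0.5), 0.9),
        PartDetection("slider_drawer", "prismatic", (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.3, 0.0, 0.4), 0.3),
    ]


text = perceive_to_text(None, points, "cabinet", fake_segment, fake_detect)
print(text)
```

Injecting the stages keeps the glue logic testable without GPUs or model weights, and makes it easy to swap in a different segmenter or part detector.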

Conclusion

In this work, we propose a kinematic-aware prompting framework that uses the rich world knowledge inherent in LLMs for generalizable articulated object manipulation. Based on the idea that the kinematic structure of an object determines how it can be manipulated, this framework prompts LLMs with the kinematic knowledge of objects to generate low-level motion trajectory waypoints for manipulating various objects. Concretely, we build a unified kinematic knowledge parser and a kinematic-aware planner that enable LLMs to understand diverse object kinematic structures for generalizable articulated object manipulation via in-context learning. We evaluate our method on 48 instances across 16 categories, and the results show that it generalizes across unseen instances and categories with only 17 demonstrations for prompting. Real-world experiments further demonstrate our framework's generalization to practical scenarios.